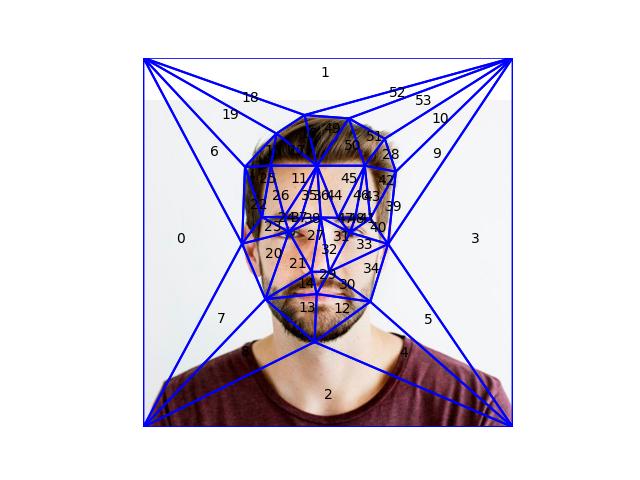

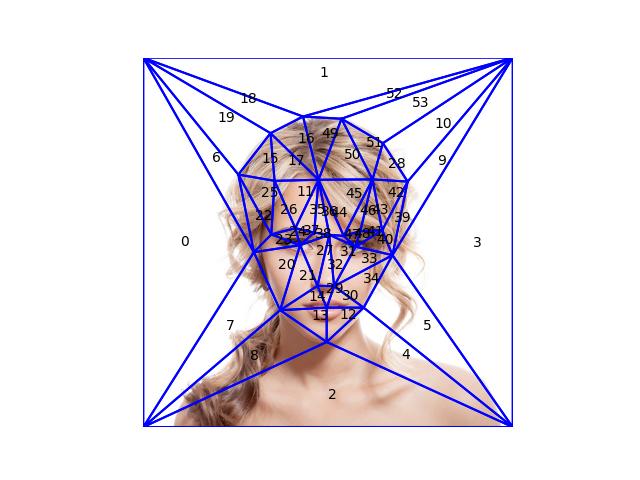

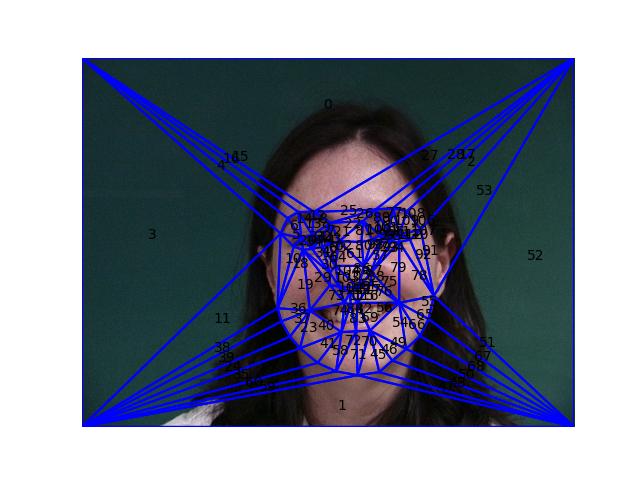

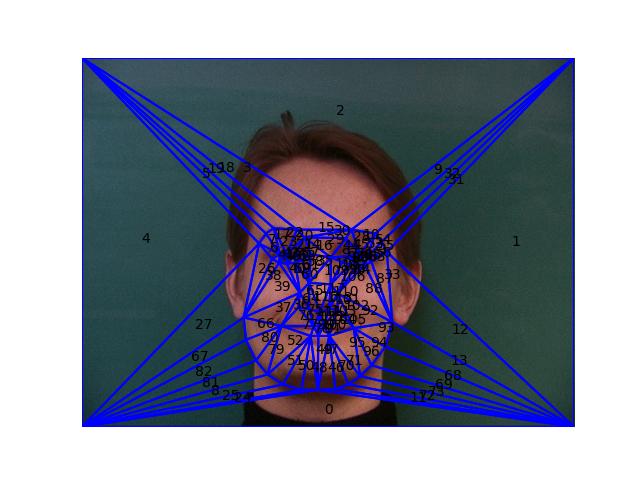

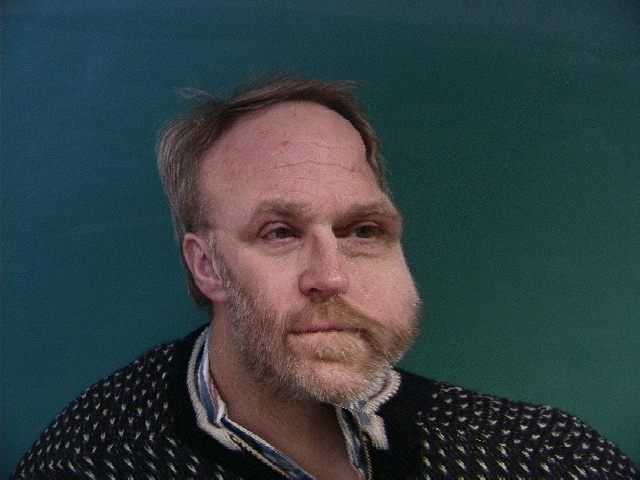

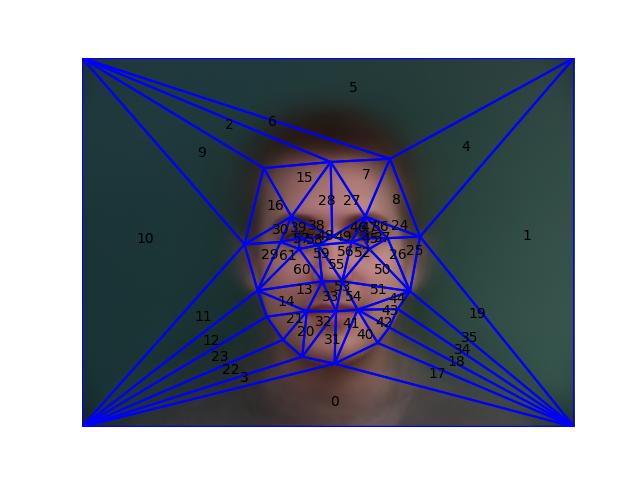

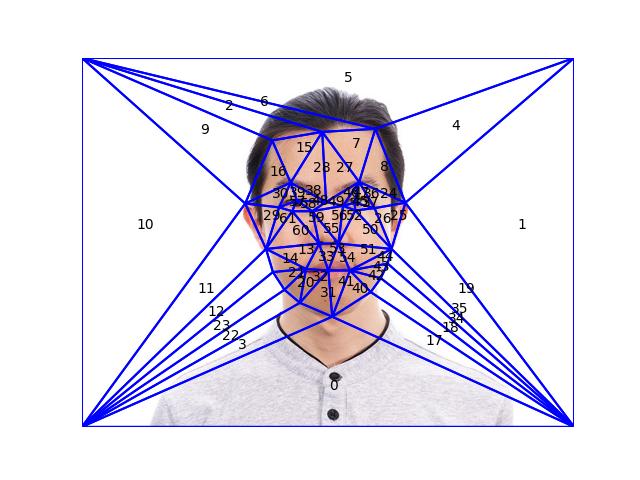

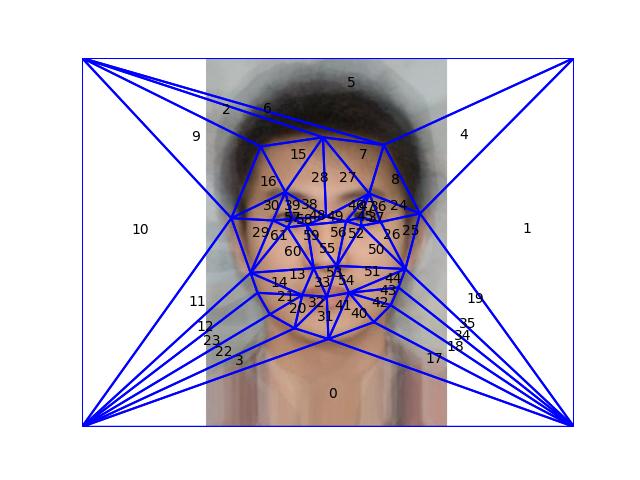

Part 1. Defining Correspondences

The correspondences were defined with the `label_img.py` script, and in this section we compute the Delaunay triangulation. We first add the four image corners to the point set so that the triangulation covers the entire image, then compute the triangular mesh with `Delaunay` from `scipy.spatial`. Below we visualize the generated mesh overlaid on the images. Each triangle is labeled with its index in the triangle list. This makes it easy to spot discrepancies in the mesh correspondence between the images, and fixing them makes the morph look more natural.
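A minimal sketch of the triangulation step. It assumes the keypoints are stored as (N, 2) NumPy arrays of (x, y) coordinates and that both images have the same size. The helper names `add_corner_points` and `shared_triangulation` are hypothetical. Building the mesh on the mean of the two point sets, so that one list of triangle indices can be reused for both images, is also an assumption: the text does not say which point set the mesh was computed on.

```python
import numpy as np
from scipy.spatial import Delaunay


def add_corner_points(points, width, height):
    """Append the four image corners to an (N, 2) array of (x, y) keypoints."""
    corners = np.array(
        [[0, 0], [width - 1, 0], [0, height - 1], [width - 1, height - 1]],
        dtype=float,
    )
    return np.vstack([np.asarray(points, dtype=float), corners])


def shared_triangulation(points_a, points_b, width, height):
    """Return both padded point sets and one triangle list valid for both.

    Hypothetical helper. Assumption: the mesh is built on the mean of the two
    point sets, so row i of `simplices` indexes the same keypoints in each image.
    """
    pts_a = add_corner_points(points_a, width, height)
    pts_b = add_corner_points(points_b, width, height)
    # Delaunay(...).simplices is an (M, 3) array of vertex indices into the points.
    simplices = Delaunay((pts_a + pts_b) / 2.0).simplices
    return pts_a, pts_b, simplices
```

Because `simplices` stores vertex indices rather than coordinates, `pts_a[simplices]` and `pts_b[simplices]` give matching (M, 3, 2) arrays of triangle corners in each image. A per-triangle warp between the two images needs exactly that pairing.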
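The labeled overlay can be sketched with Matplotlib. This assumes `simplices` is an (M, 3) integer array shared by both images, such as the `.simplices` attribute of a `scipy.spatial.Delaunay` object, and that points are (x, y). The function names `triangle_centroids` and `show_labeled_meshes` are hypothetical. Each label is drawn at its triangle's centroid, so the same index appears on corresponding triangles in every panel.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend; remove this line in a notebook
import matplotlib.pyplot as plt
import numpy as np


def triangle_centroids(points, simplices):
    """Mean of each triangle's three vertices, shape (M, 2)."""
    return np.asarray(points, dtype=float)[simplices].mean(axis=1)


def show_labeled_meshes(images, point_sets, simplices, fontsize=6):
    """Draw the same triangle list over each image and label triangles by index."""
    fig, axes = plt.subplots(1, len(images), figsize=(5 * len(images), 5))
    axes = np.atleast_1d(axes)
    for ax, image, points in zip(axes, images, point_sets):
        points = np.asarray(points, dtype=float)
        ax.imshow(image)
        ax.triplot(points[:, 0], points[:, 1], triangles=simplices, linewidth=0.8)
        # Label at the centroid so the index sits inside its triangle.
        for i, (cx, cy) in enumerate(triangle_centroids(points, simplices)):
            ax.text(cx, cy, str(i), fontsize=fontsize, ha="center", va="center")
        ax.axis("off")
    fig.tight_layout()
    return fig, axes
```

If triangle 12 covers the left eye in one panel but part of the nose in the other, the corresponding keypoints were probably clicked in a different order.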
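As a programmatic complement to the visual check, one common symptom of a correspondence discrepancy is a triangle whose vertex orientation differs between the two images, so that it folds over during the morph. This is a swapped-in technique and not necessarily what was done here. The sketch below uses hypothetical helpers `signed_areas` and `flipped_triangles` and takes the sign of the 2D cross product as each triangle's orientation.

```python
import numpy as np


def signed_areas(points, simplices):
    """Signed area of each triangle; the sign encodes vertex orientation."""
    tri = np.asarray(points, dtype=float)[simplices]  # (M, 3, 2)
    ab = tri[:, 1] - tri[:, 0]
    ac = tri[:, 2] - tri[:, 0]
    return 0.5 * (ab[:, 0] * ac[:, 1] - ab[:, 1] * ac[:, 0])


def flipped_triangles(points_a, points_b, simplices):
    """Indices of triangles whose orientation differs between the two images.

    Image y-axes point down, which inverts the orientation convention, but it
    does so equally for both images, so the comparison is unaffected. Triangles
    that collapse to zero area in only one image are flagged as well.
    """
    sign_a = np.sign(signed_areas(points_a, simplices))
    sign_b = np.sign(signed_areas(points_b, simplices))
    return np.flatnonzero(sign_a != sign_b)
```

The returned indices are the same numbers drawn as labels on the mesh, so any flagged triangle can be located directly in the overlay.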